5 times you should choose NotebookLM over ChatGPT

Here's when you need to ditch the mainstream LLMs

Most people are using the wrong AI for their content operations.

They're wrestling with ChatGPT hallucinations and trying to manage scattered documents across multiple tools. Meanwhile, Google has a tool built for exactly what they need, and very few people are talking about it.

NotebookLM is the AI system builder's secret weapon.


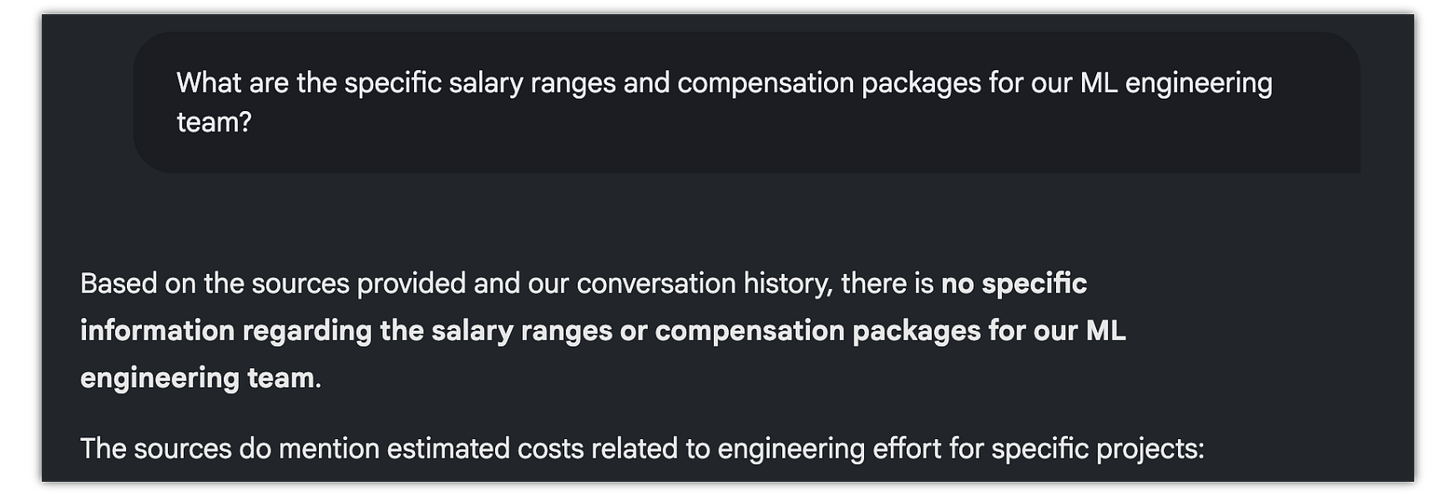

1. When you're building a company knowledge base from 250+ documents

ChatGPT and Claude start to tap out when the number of project knowledge documents gets too high. Mistakes start to pop up.

NotebookLM? You can load 300 sources (Enterprise Plan) and build entire company knowledge bases. You can upload PDFs, videos, meeting transcripts, whatever — and create a searchable brain for your entire organization.

Think about it:

- Every product manual
- Every SOP
- Every meeting recording
- Every strategy document
- Every piece of institutional knowledge

All searchable. All connected. All yours.

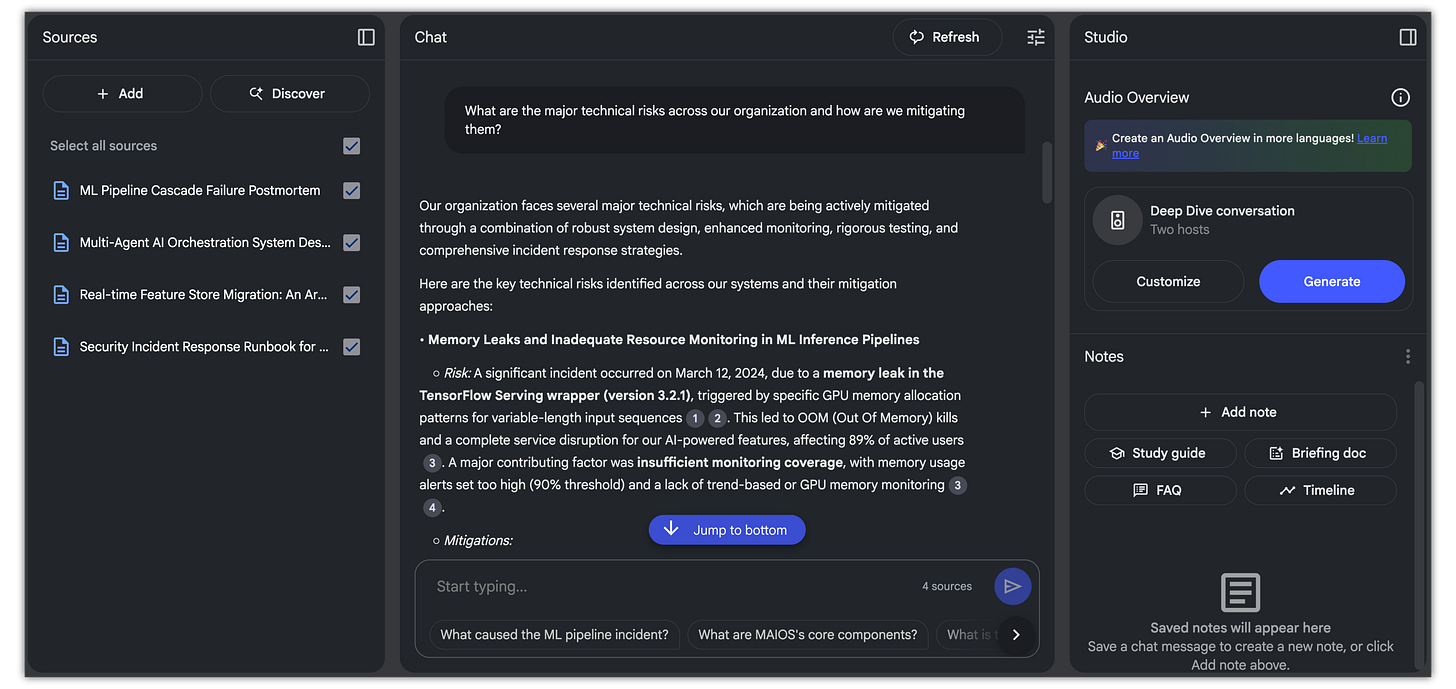

2. When accuracy really matters

Here's the thing about regular LLMs — they can lie a lot.

Not maliciously. They just fill gaps with plausible-sounding nonsense. Super helpful when you're brainstorming. Absolutely terrifying when you're writing technical documentation or analyzing legal contracts.

NotebookLM only pulls from your sources.

If the answer isn't in your uploaded documents, it tells you it doesn't know. No creative interpretation. No helpful guessing. Just answers drawn from your actual sources.

3. When you need to analyze patterns across massive document sets

Regular LLMs give you really good summaries. NotebookLM gives you synthesis.

Big difference. You could analyze 10 different institutional agreements and generate tables showing clause-by-clause differences. Or you could pull patterns from years of personal journals. Try doing that with ChatGPT.

The system excels at:

- Cross-document pattern recognition
- Comparative analysis
- Pulling specific clauses from hundreds of pages
- Finding connections you'd never spot manually

Basically, it turns your document mountain into actionable intelligence.

4. When you want to learn through audio (and reading is too slow)

Many users tell me this feature alone is worth the price of admission (which is free, by the way).

NotebookLM creates podcast conversations about your uploaded content. Not text-to-speech. Actual conversational podcasts with two AI hosts discussing your material.

The audio is so realistic by AI standards, with pauses, "umms," and natural conversational flow, that you can genuinely forget you're listening to AI. Perfect for auditory learners or anyone who needs to absorb complex material while commuting.

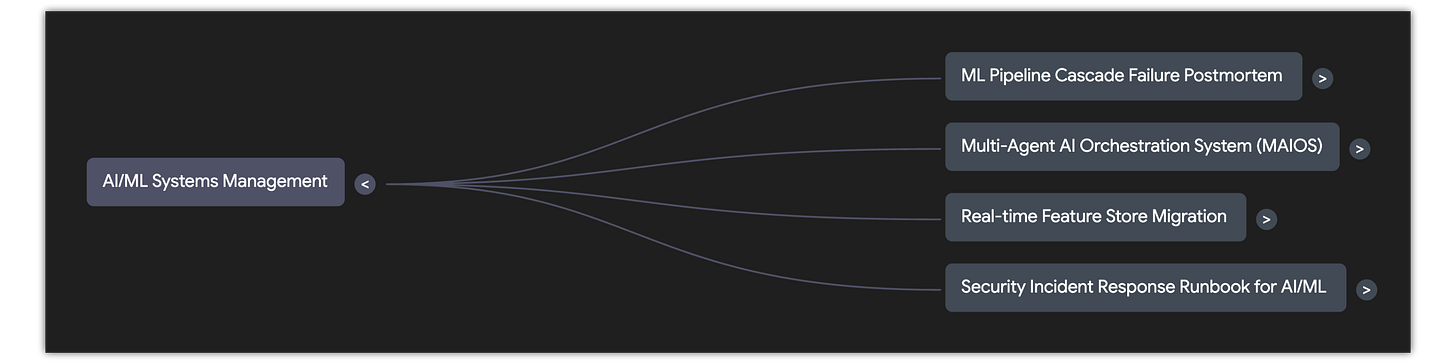

5. When you need visual organization of complex information

The mind map feature (my favorite) automatically categorizes everything from your sources into visual hierarchies.

This isn't some basic flowchart. It's an interactive map of your entire knowledge base that you can navigate and query directly. Click a node, get relevant information. See connections between ideas. Build new frameworks from existing content.

For content strategists organizing client materials? Essential.

For founders mapping out their scattered documentation? Game-changing.

For ghostwriters repurposing content? Money-making.

NotebookLM isn't trying to be ChatGPT.

It's not competing on general knowledge or creative writing or coding assistance.

It's laser-focused on one thing: turning your documents into intelligence.

If you're serious about building content systems, managing knowledge at scale, or creating competitive advantages from information — this is your tool.

Everyone else can keep playing with ChatGPT.

Ready to build real systems?

Start with one focused use case. Upload 10-20 related documents. See what happens when AI actually understands your context.

Then scale from there.

—Alex

Founder of AI Disruptor

At this point, I mostly use NotebookLM to generate detailed summaries of YouTube videos. I asked Claude for a prompt that would capture every detail of a YouTube instructional video. I load the video transcript into NotebookLM and run the Claude prompt, which I keep saved as a note in QOwnNotes, an app I always have open.

I get an extremely detailed summary of the video which I save with the URL in Obsidian.

I don't use this for YouTube videos you have to watch to understand, but for any instructional or informational video that can be followed by ear alone, it works great.

Here's the Claude prompt I use:

You are tasked with summarizing a YouTube video while capturing as many points covered in the video as possible. Your goal is to provide a detailed summary that includes specific information such as prompts or instructional procedures described in the video.

Please summarize the content of this video following these guidelines:

1. Begin your summary with a brief introduction of the video's main topic or purpose.

2. Organize the summary into logical sections based on the video's structure or main points discussed.

3. Capture as many points covered in the video as possible. There is no limit on the amount of detail you should include.

4. Pay special attention to any prompts, instructional procedures, or step-by-step guides mentioned in the video. Describe these as precisely as possible, maintaining their original order and structure.

5. Include any relevant examples, analogies, or case studies mentioned in the video to illustrate key points.

6. If the video includes any numerical data, statistics, or specific facts, make sure to include these in your summary.

7. Capture any conclusions, recommendations, or calls to action presented in the video.

8. If the video mentions any external resources, websites, or tools, include these in your summary, preferably as links using Markdown link format: [link text](URL)

9. Conclude your summary with a brief overview of the video's main takeaways.

Remember, the goal is to provide a comprehensive summary that could serve as a detailed reference for someone who hasn't watched the video. Don't hesitate to include all relevant information, as there is no limit on the level of detail required.

I have a NotebookLM notebook with 250 hand-picked sources. They represent my PMM experience and the latest work from authors I hold in high regard. With the right source selection and instructions, they're a fantastic way to build, for example, a PMM course. I then take the NotebookLM outlines into a Claude setup dense with PMM sources to "explode" each learning item and write the final copy. I intervene at every single step.

My question is: is there a way to connect NotebookLM knowledge and "artifacts" to a GenAI tool like Claude or GPT?