Here's what nobody is telling you about AI agents in 2025

What's really coming (and how to prepare).

I hope you all had a great New Year. I'm back, and we're kicking off 2025 with AI agents. In the coming months, I'll place a big focus on AI agents and agent building, so subscribe and join our community. And don't fall for the crazy hype out there. There are a lot of false claims, and plenty of "agents" that are nothing more than what you can already do with Claude or ChatGPT. I'm here to help you navigate that.

Everyone's talking about the potential of AI agents in 2025 (and don't get me wrong, it's really significant), but there's a crucial detail that keeps getting overlooked: the gap between current capabilities and practical reliability.

Here's the reality check that most predictions miss: AI agents currently operate at about 80% accuracy (according to Microsoft's AI CEO). Sounds impressive, right? But for businesses and users to actually trust these systems with meaningful tasks, we need 99% reliability. That's not just a 19-point gap. It means cutting the error rate from 20% to 1%, a twentyfold reduction, and it's the difference between an interesting tech demo and a business-critical tool.

This matters because it completely changes how we should think about AI agents in 2025. Major players like Microsoft, Google, and Amazon are pouring billions into development, and they're all facing the same fundamental challenge: making agents work reliably enough that you can actually trust them with your business processes.

Think about it this way: Would you trust an assistant who gets things wrong 20% of the time? Probably not. But would you trust one who makes a mistake only 1% of the time, especially if they could handle repetitive tasks across your entire workflow? That's a completely different conversation.

This isn't just abstract speculation. Looking at the infrastructure changes and deployment patterns from major tech companies, we can see they're building for this reality. They're not just scaling up existing models – they're fundamentally rethinking how AI agents operate, focusing on reliability over raw capabilities.

For you, this means two things:

The AI agent revolution is real, but its timeline looks different from what most people predict

There's actually a huge opportunity in understanding this gap between hype and reality

While everyone else is chasing the hype, you can focus on what really matters: building sustainable, reliable AI workflows that actually deliver value.

The accuracy problem

Imagine you're writing an important email. Your AI agent helpfully drafts it, schedules a follow-up meeting, and updates your project timeline. Sounds perfect, right? Except... about once every five emails, something goes wrong. Maybe it schedules the meeting on a holiday, or misinterprets a crucial project detail.

This is the 80% accuracy problem in real terms. And it's the key challenge that separates today's AI agents from what we need in 2025.

Here's what nobody else is telling you about this gap:

The reality is more nuanced than just "making AI better." Current AI agents can handle simple tasks remarkably well – data extraction, basic scheduling, routine analyses. But the moment you need them to handle complex workflows or make interconnected decisions, that 80% accuracy becomes a serious liability.
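Why does 80% become a liability in multi-step work? A quick back-of-the-envelope sketch shows it. The calculation assumes that every step succeeds independently and with the same probability. That's a simplification, because real errors can be correlated and some get caught along the way, so treat the output as illustrative rather than as a prediction. The 80% and 99% figures come from above; the step counts are arbitrary.

```python
def end_to_end_success(step_accuracy: float, steps: int) -> float:
    """Chance that every step succeeds, assuming independent, equally reliable steps."""
    return step_accuracy ** steps

for accuracy in (0.80, 0.99):
    for steps in (1, 5, 10):
        p = end_to_end_success(accuracy, steps)
        print(f"{accuracy:.0%} per step, {steps:>2} steps: {p:.1%} chance of zero errors")
```

Under those assumptions, an 80%-accurate agent gets through a five-step workflow without a single error only about a third of the time (0.8^5 ≈ 0.33). A 99%-accurate agent does it about 95% of the time.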

I've been tracking the technical indicators from major AI companies, and here's what's actually happening behind the scenes:

They're not just working on making agents smarter – they're fundamentally rethinking how they handle uncertainty and verification

The focus has shifted from raw capabilities to reliability engineering

Companies like Microsoft and Google are building entirely new infrastructure just to bridge this trust gap

For your business, brand, or use cases, this points to a likely timeline:

First half of 2025: Early AI agents excel at specific, contained tasks

Use them for data processing

Document analysis

Basic workflow automation

But keep humans in the loop for verification

Second half of 2025 into 2026: The reliability breakthrough

Major companies solve specific domain reliability

Enterprise-grade agents emerge for narrow use cases

Early adopters gain significant advantages in specific areas

Here's an opportunity: While everyone else waits for perfect AI agents, you can start building expertise with current tools in controlled, low-risk areas. This gives you two major advantages:

You'll understand the practical limitations and opportunities before your competitors

You'll be perfectly positioned when reliability catches up to capability

Think of it like learning to drive. You don't start on the highway – you practice in a parking lot. Similarly, the best way to approach AI agents is to start with simple, contained tasks where that 20% error rate won't hurt you.

Companies are wasting a lot of money trying to deploy AI agents across their entire operation at once. Don't make that mistake. Instead, focus on areas where:

Errors are easily caught and corrected

The stakes are lower

The potential efficiency gains are highest

This way, you're building real expertise while managing real risks.
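One way to put "errors are easily caught and corrected" into practice is a review gate. Cheap, deterministic checks run on everything the agent proposes. Anything that fails goes to a person, and only clean output is applied automatically. Here's a minimal sketch built on the scheduling example from earlier. The `ProposedMeeting` structure, the checks, and the holiday list are all hypothetical placeholders for your own rules.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical holiday list; in practice, pull this from your own calendar.
HOLIDAYS = {date(2025, 1, 1), date(2025, 12, 25)}

@dataclass
class ProposedMeeting:
    """A meeting the agent wants to schedule (hypothetical structure)."""
    title: str
    day: date
    attendees: list[str]

def check_meeting(m: ProposedMeeting) -> list[str]:
    """Cheap, deterministic checks for predictable agent mistakes."""
    problems = []
    if m.day.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        problems.append("falls on a weekend")
    if m.day in HOLIDAYS:
        problems.append("falls on a holiday")
    if not m.attendees:
        problems.append("has no attendees")
    return problems

@dataclass
class ReviewGate:
    approved: list[ProposedMeeting] = field(default_factory=list)
    needs_human: list[tuple[ProposedMeeting, list[str]]] = field(default_factory=list)

    def submit(self, m: ProposedMeeting) -> None:
        problems = check_meeting(m)
        if problems:
            self.needs_human.append((m, problems))  # a person reviews and corrects
        else:
            self.approved.append(m)  # passed every check: safe to apply automatically

gate = ReviewGate()
gate.submit(ProposedMeeting("Project sync", date(2025, 1, 7), ["sam@example.com"]))
gate.submit(ProposedMeeting("Kickoff", date(2025, 1, 1), ["sam@example.com"]))
print(len(gate.approved), "approved;", len(gate.needs_human), "sent for review")
```

The checks don't need to be clever. Their job is to catch the predictable failures, like a meeting on a holiday, so your human reviewers only see the cases that actually need judgment.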

Your practical guide to what's actually coming

Something I've learned about "AI agents" from various companies: Most of what's being marketed as "AI agents" right now is just cleverly chained prompts. And you know what? That's actually great news for you.

Why? Because if you've been learning how to write effective prompts, you're already building the exact skills you'll need when true AI agents arrive. It's like learning to code in basic HTML before full web development frameworks existed – you're understanding the fundamentals that everything else will build upon.

Current reality:

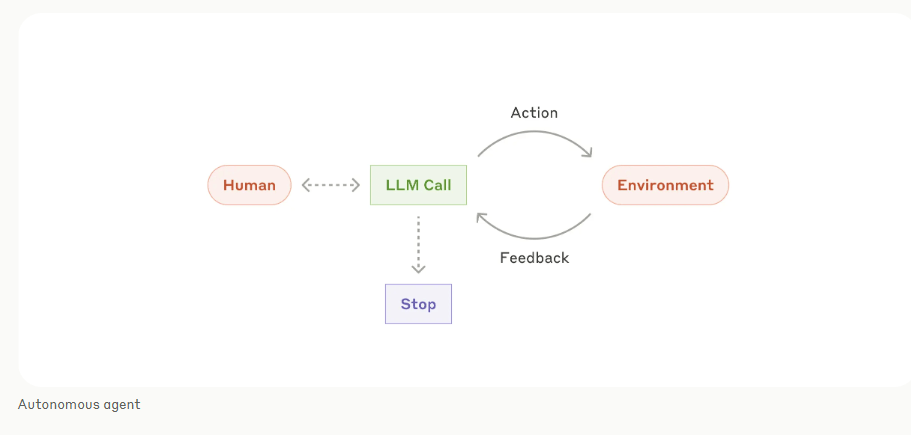

Companies chain together LLM responses

They add basic error handling

They wrap it in a nice UI

They call it an "agent"
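To make that list concrete, here's a minimal sketch of what a chained-prompt "agent" often amounts to. `fake_llm` is a hypothetical placeholder, not a real API, so swap in whatever model client you use. The retry-on-exception-or-empty-reply rule is just one example of "basic error handling."

```python
from typing import Callable

def fake_llm(prompt: str) -> str:
    """Placeholder for a real model call (hypothetical); echoes part of the prompt."""
    return f"<response to: {prompt[:40]}>"

def run_chain(task: str, templates: list[str],
              llm: Callable[[str], str], max_retries: int = 2) -> str:
    """Run prompt templates in a fixed order, feeding each output into the next."""
    previous = task
    for template in templates:
        prompt = template.format(previous=previous)
        for _ in range(max_retries + 1):
            try:
                output = llm(prompt)
            except Exception:
                continue  # basic error handling: retry on any exception
            if output.strip():
                break  # accept the first non-empty reply
        else:
            raise RuntimeError(f"step failed after {max_retries + 1} attempts: {template!r}")
        previous = output
    return previous

steps = [
    "Summarize this request: {previous}",
    "List the action items in: {previous}",
    "Draft a reply email covering: {previous}",
]
result = run_chain("Client wants the launch moved to March", steps, fake_llm)
print(result)
```

Notice that the sequence of steps is fixed in advance. The model never decides what to do next; it just fills in each template in turn.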

But real AI agents in 2025 will be fundamentally different. Based on the infrastructure changes from major tech companies, here's what's actually coming:

Multi-step reasoning without human intervention

Not just chained prompts

Actually understanding task context

Handling unexpected scenarios

Cross-system operation

Working across multiple tools

Managing API connections

Maintaining context between tasks

Learning from interactions

Adapting to your workflow

Improving from feedback

Building institutional knowledge
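The key difference from a prompt chain is who decides the next step. Below is a minimal sketch of a generic decide-act-observe loop. It is not how any particular company builds its agents. `scripted_policy` is a hard-coded placeholder standing in for a model that chooses the next action from the goal and the history so far. The two tools are hypothetical stand-ins for real systems. The sketch illustrates multi-step decisions, working across tools, and carrying context between steps. It does not attempt learning from feedback.

```python
from typing import Callable

# Hypothetical tools standing in for real systems (a CRM lookup, an email drafter).
def lookup_customer(name: str) -> str:
    records = {"acme": "Acme Corp, renewal due 2025-03-01"}
    return records.get(name.lower(), "not found")

def draft_email(note: str) -> str:
    return f"Draft: Hi, a quick reminder: {note}"

TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_customer": lookup_customer,
    "draft_email": draft_email,
}

History = list[tuple[str, str, str]]  # (tool, argument, result) per step

def scripted_policy(goal: str, history: History) -> tuple[str, str]:
    """Placeholder for a model choosing the next action from the goal and history.
    Returns (tool_name, argument), or ("finish", final_answer)."""
    if not history:
        return "lookup_customer", "acme"
    last_tool, _, last_result = history[-1]
    if last_tool == "lookup_customer":
        return "draft_email", last_result
    return "finish", last_result

def run_agent(goal: str,
              policy: Callable[[str, History], tuple[str, str]],
              tools: dict[str, Callable[[str], str]],
              max_steps: int = 5) -> tuple[str, History]:
    history: History = []  # context carried from one step to the next
    for _ in range(max_steps):
        action, arg = policy(goal, history)
        if action == "finish":
            return arg, history
        if action not in tools:
            history.append((action, arg, "error: unknown tool"))  # record, don't crash
            continue
        history.append((action, arg, tools[action](arg)))
    raise RuntimeError("step limit reached without finishing")

answer, trace = run_agent("Remind Acme about their renewal", scripted_policy, TOOLS)
print(answer)
```

The `max_steps` cap and the unknown-tool check are small examples of the reliability engineering discussed earlier. The loop is bounded, and a bad decision gets recorded instead of crashing the run.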

The biggest opportunities aren't where most people are looking. Here are the areas where AI agents will create real value first:

Process Documentation & Execution

Converting SOPs into executable workflows

Maintaining and updating procedures

Catching process inconsistencies

Data Analysis & Reporting

Automated insight generation

Cross-system data correlation

Continuous monitoring and alerts

Customer Service Enhancement

Not replacing humans, but amplifying them

Handling routine cases autonomously

Escalating complex issues intelligently

Here's where it gets interesting. While everyone's waiting for "true" AI agents, you can start building your advantage now. The skills you develop with prompt engineering today are the exact same skills you'll need to:

Direct AI agents effectively

Customize their behavior

Debug their outputs

Optimize their performance

Think of it this way: Current prompt engineering is like learning to give clear instructions to a very capable but literal-minded intern. Future AI agents will be like that intern after they've gained experience – they'll need less explicit instruction, but the fundamental communication principles remain the same.

This is why I spend so much time teaching prompt engineering. It's not just about making ChatGPT or Claude work better today – it's about building the skills you'll need to thrive in the agentic era.

The companies and individuals that succeed with AI agents in 2025 won't be the ones who waited for perfect technology. They'll be the ones who:

Built expertise with current tools

Developed clear processes for AI interaction

Learned how to effectively direct AI systems

Created frameworks for AI integration

The secret goldmine: AI royalties explained

Remember when everyone rushed to create ChatGPT prompts last year? That was just the warmup. What's coming with AI royalties is something entirely different – and much more interesting.

Here's what I've pieced together so far: AI royalties are about turning your expertise into reusable "skills" that AI agents can execute. But unlike prompts that anyone can copy-paste, these are structured, verifiable procedures that earn you money every time they're used.

Think of it like this: Instead of writing a guide about how to do something, you're creating an actual digital workflow that others can plug into their AI agents. And here's the key part – you get paid every time someone uses it.

The implications here are fascinating. We're moving from:

One-time content creation → Perpetual value generation

Written procedures → Executable workflows

Static knowledge → Dynamic capabilities

For creators and experts, this is a complete paradigm shift. Your knowledge isn't just content anymore – it's becoming functional code that AI agents can execute.

Here's what successful early movers are doing:

Documenting their unique workflows in detail

Breaking down complex processes into discrete steps

Building libraries of specialized procedures

Testing and refining their methods for consistency

The key is understanding that AI agents will need structured, reliable processes to achieve that 99% reliability we talked about earlier. Your expertise, properly formatted, becomes part of the solution.
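What might "properly formatted" expertise look like? The post doesn't define a format for these skills. Everything below is a hypothetical illustration: the `Skill` and `Step` classes, the invoice example, the per-use counter, and the royalty rate. The idea it shows is a procedure broken into discrete steps, with a check after each one and a count of completed runs that a per-use payment could be based on.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Step:
    name: str
    run: Callable[[dict], dict]    # produces the next working state
    check: Callable[[dict], bool]  # verifies that state before moving on

@dataclass
class Skill:
    """Hypothetical packaged procedure: discrete steps, each with its own check."""
    name: str
    author: str
    steps: list[Step]
    uses: int = 0  # completed runs, the basis for a per-use royalty

    def execute(self, state: dict) -> dict:
        for step in self.steps:
            state = step.run(state)
            if not step.check(state):
                raise ValueError(f"{self.name}: check failed after step '{step.name}'")
        self.uses += 1  # only count runs that passed every check
        return state

def parse_total(s: dict) -> dict:
    cleaned = s["raw_total"].replace("$", "").replace(",", "")
    return {**s, "total": round(float(cleaned), 2)}

def add_tax(s: dict) -> dict:
    return {**s, "with_tax": round(s["total"] * (1 + s["tax_rate"]), 2)}

# A made-up invoice-cleanup procedure, for illustration only.
invoice_skill = Skill(
    name="normalize-invoice",
    author="you",
    steps=[
        Step("parse total", parse_total, lambda s: s["total"] >= 0),
        Step("add tax", add_tax, lambda s: s["with_tax"] >= s["total"]),
    ],
)

ROYALTY_PER_USE = 0.05  # hypothetical rate, in dollars
result = invoice_skill.execute({"raw_total": "$1,200.00", "tax_rate": 0.08})
print(result["with_tax"], invoice_skill.uses * ROYALTY_PER_USE)
```

The per-step checks are what make a procedure "verifiable." A run either passes every check or stops with a clear failure, and that's the kind of structure the 99% target calls for.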

Looking ahead: Your action plan

When I talk to people about AI agents, they usually fall into two camps: those waiting for the "perfect" agent to arrive, and those frantically trying to implement everything at once. Both are missing the real opportunity.

The winners in 2025 won't be the ones who waited, nor the ones who rushed. They'll be the ones who built methodically, experimented constantly, and stayed focused on real value.

I've seen many companies try to boil the ocean. Start small, but be systematic. Your goal isn't perfect automation – it's learning how these systems actually work in your context.

Let me be clear about something I believe: 2025 isn't about AI agents taking over everything. It's about augmenting human capability in specific, valuable ways. Your goal isn't to automate yourself out of a job; it's to amplify your impact. In other words, it's your chance to scale up everything you do.

In 2025, I will place a big focus on AI agents and agent building, so make sure to subscribe and join our community.
