A clear guide to understanding AI agents

Using Google's research to explore how agents think and act.

If you've been following AI developments, you definitely know about AI agents by now. Google dropped a great white paper back in September explaining agents, but for some reason it is only now making the rounds. It came across my screen, and I thought it would make a great reference for a guide on the topic.

When most people interact with AI today, they experience a simple input-output system:

Ask a question

Get a response

Maybe use some basic integrations

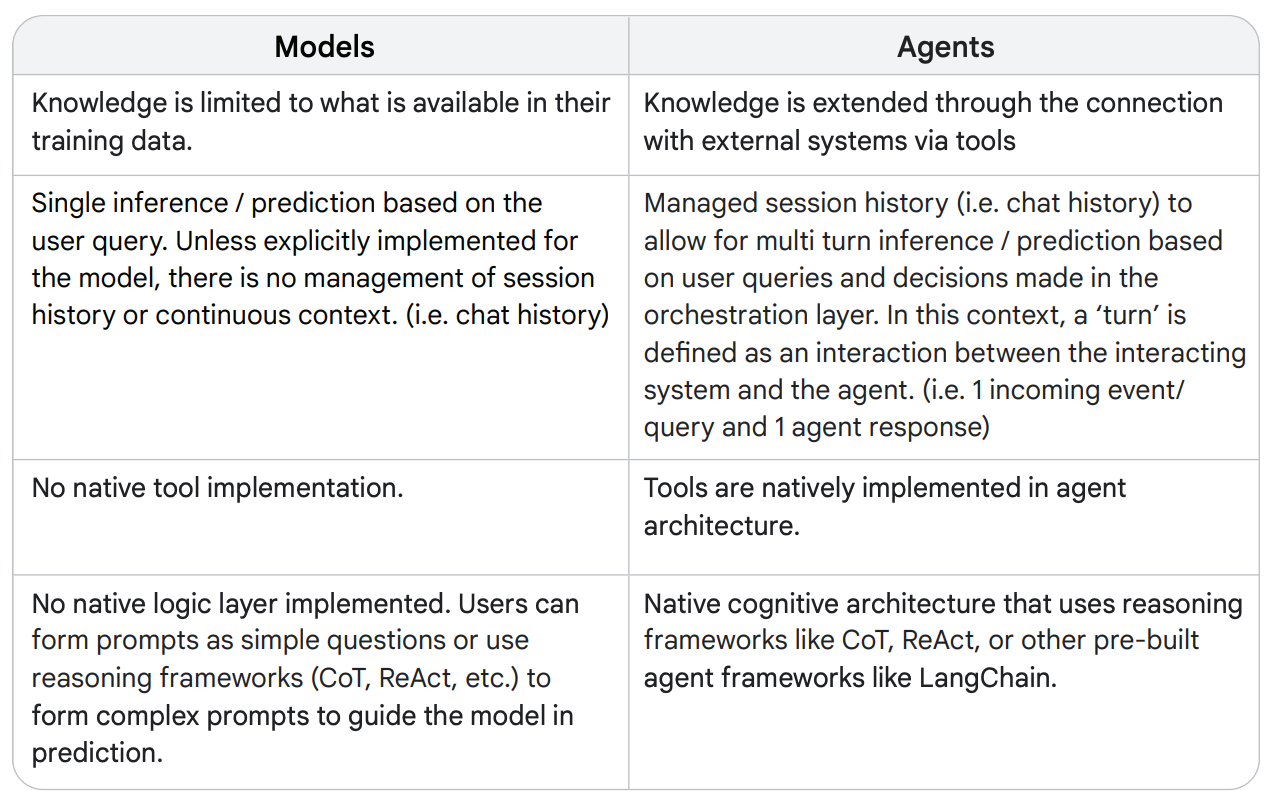

AI agents represent something fundamentally different. Instead of just responding to prompts, they can understand goals, create plans, and execute multiple steps to achieve those goals.

Looking at Google's white paper titled “Agents,” we can see that the key difference lies in how agents approach tasks. While traditional AI systems follow fixed patterns, agents can:

Break down complex goals into manageable steps

Choose appropriate tools for different situations

Adapt their approach based on results

Maintain context across multiple interactions

The breakthrough in Google's research isn't about more powerful models or bigger datasets. Instead, they've mapped out something more fundamental: a cognitive architecture that allows AI to operate with more agency.

(This is the type of stuff that will help us fix the current accuracy problem I wrote about last week.)

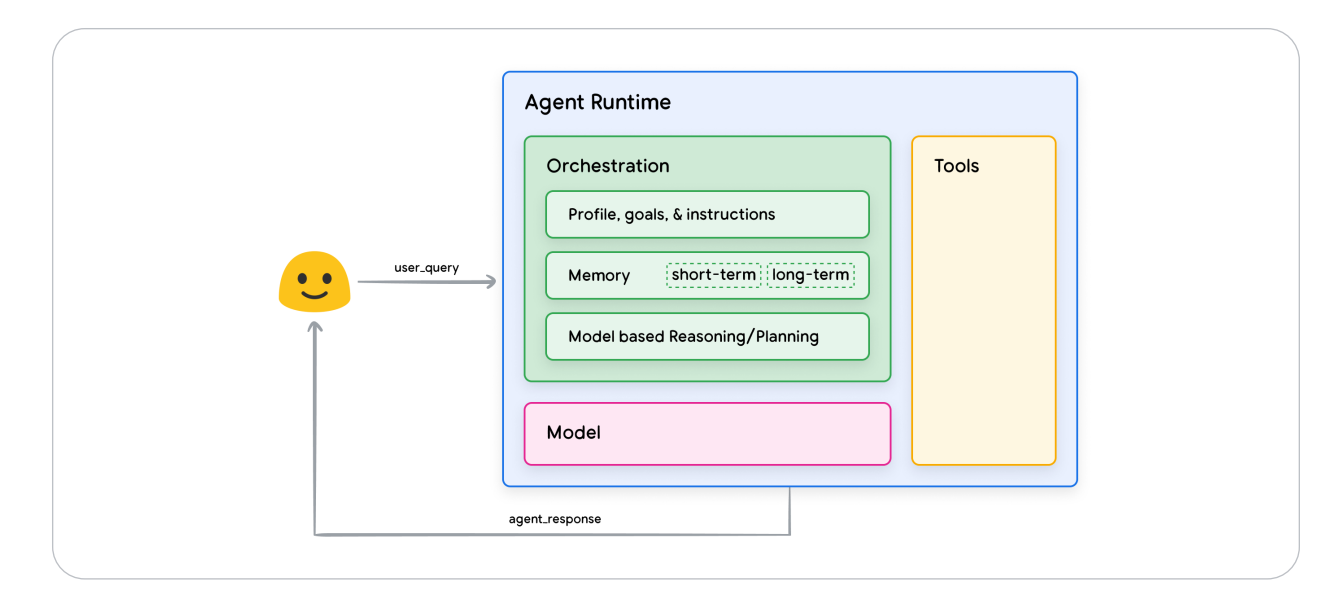

Their research shows three critical components that make this possible:

A sophisticated reasoning framework

The ability to use various tools effectively

An orchestration layer that ties everything together

This architecture opens up possibilities that simply weren't feasible with traditional AI approaches.

In its most fundamental form, a Generative AI agent can be defined as an application that attempts to achieve a goal by observing the world and acting upon it using the tools that it has at its disposal. Agents are autonomous and can act independently of human intervention, especially when provided with proper goals or objectives they are meant to achieve. Agents can also be proactive in their approach to reaching their goals. Even in the absence of explicit instruction sets from a human, an agent can reason about what it should do next to achieve its ultimate goal.

- Google white paper (“Agents”)

Let's start with what makes agents different

Jumping into AI agents can feel overwhelming. With terms like "orchestration layers," "extensions," and "cognitive architectures" being thrown around, it's easy to get lost in the complexity.

Let's break this down into something clearer.

Looking at Google's research, one thing becomes crystal clear: most of what we call "AI" today is just scratching the surface. Think about how you currently interact with AI tools - they're essentially smart pattern matchers that respond to your inputs.

But agents are different. A true agent actively:

Observes what's happening

Makes decisions about what to do

Takes actions to achieve specific goals

Adjusts its approach based on results
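To make that loop concrete, here is a minimal sketch in Python. It is a toy of my own, not something from Google's white paper: the "world" is a hidden number, and the hypothetical `ToyAgent` decides on a guess, acts, observes the feedback, and adjusts its search range. All class and function names are assumptions made up for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class GuessingEnvironment:
    """Hypothetical toy world: a hidden number the agent must find."""
    secret: int

    def feedback(self, guess: int) -> str:
        # The environment reports how the action turned out.
        if guess < self.secret:
            return "too low"
        if guess > self.secret:
            return "too high"
        return "correct"


@dataclass
class ToyAgent:
    """Hypothetical agent that tracks the range of values still possible."""
    low: int
    high: int
    history: List[Tuple[int, str]] = field(default_factory=list)

    def decide(self) -> int:
        # Decide: pick the midpoint of the range still considered possible.
        return (self.low + self.high) // 2

    def adjust(self, guess: int, result: str) -> None:
        # Adjust: narrow the range based on what the last action revealed.
        if result == "too low":
            self.low = guess + 1
        elif result == "too high":
            self.high = guess - 1
        self.history.append((guess, result))


def run(agent: ToyAgent, env: GuessingEnvironment, max_steps: int = 20) -> Optional[int]:
    """Repeat decide -> act/observe -> adjust until the goal is met or steps run out."""
    for _ in range(max_steps):
        guess = agent.decide()        # make a decision
        result = env.feedback(guess)  # take the action and observe the result
        agent.adjust(guess, result)   # adjust the approach
        if result == "correct":
            return guess
    return None
```

A real agent would swap the midpoint rule for a model's reasoning and the number game for actual tools, but the shape of the loop stays the same.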

The three pieces that actually matter

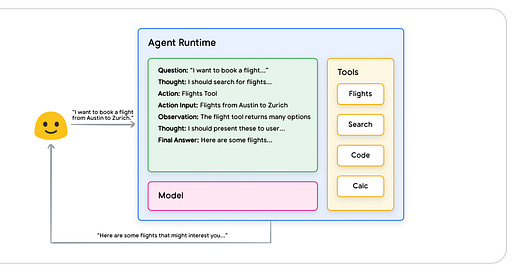

Google's research reveals three fundamental components that transform an AI system into a true agent:

The Model

Acts as the brain of the system

Handles reasoning and decision-making

Works with various types of inputs and tasks

The Tools

Extensions: Direct connections to external services

Functions: Ways to execute specific tasks

Data Stores: Access to knowledge and information

The Orchestration Layer

Manages the flow of decisions and actions

Determines when to use different tools

Maintains focus on the overall goal
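Here is a rough sketch of how those three pieces could fit together in code. To be clear about the assumptions: `stub_model` is a hard-coded stand-in for a real language model, the two tools are plain Python functions I made up, and `orchestrate` is a simplified loop of my own, not the architecture from the white paper.

```python
import operator
from typing import Callable, Dict, List, Optional, Tuple

# --- Tools: hypothetical stand-ins for functions and data stores ---
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}
KNOWLEDGE = {"capital of france": "Paris"}  # toy "data store"


def calculator(expression: str) -> str:
    """Evaluate a simple 'a op b' integer expression, e.g. '2 + 3'."""
    a, op, b = expression.split()
    return str(OPS[op](int(a), int(b)))


def lookup(query: str) -> str:
    """Fetch a fact from the toy data store."""
    return KNOWLEDGE.get(query.lower(), "unknown")


TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator, "lookup": lookup}


# --- Model: a rule-based stub (assumption: a real agent would call an LLM here) ---
def stub_model(goal: str, observations: List[str]) -> Tuple[str, str]:
    """Return (action, argument); action is a tool name or 'finish'."""
    if observations:
        return ("finish", observations[-1])
    if any(ch.isdigit() for ch in goal):
        return ("calculator", goal)
    return ("lookup", goal)


# --- Orchestration layer: routes model decisions to tools until done ---
def orchestrate(
    goal: str,
    model: Callable[[str, List[str]], Tuple[str, str]],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> Optional[str]:
    observations: List[str] = []
    for _ in range(max_steps):
        action, argument = model(goal, observations)
        if action == "finish":
            return argument
        tool = tools.get(action)
        if tool is None:
            observations.append(f"unknown tool: {action}")
            continue
        observations.append(tool(argument))
    return None
```

Calling `orchestrate("2 + 3", stub_model, TOOLS)` routes the goal to the calculator, while `orchestrate("capital of France", stub_model, TOOLS)` goes to the lookup tool. The point is the separation: the model only decides, the tools only execute, and the orchestration layer keeps the loop pointed at the goal.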

A simple way to think about complex agents

Think of an agent like a skilled professional handling a complex task. They don't just follow a script - they:

Understand the goal

Plan necessary steps

Choose appropriate tools

Monitor progress

Adjust when needed

This mirrors how Google's agent architecture operates, making it easier to understand the leap from traditional AI to true agents.

Google's white paper also gives us a framework to evaluate AI systems.

Here are the key questions to ask when looking at any AI tool:

Does it maintain context across interactions?

Can it break down complex goals into steps?

Does it know when to use different tools?

Can it adjust its approach based on new information?
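If you want to keep track of these questions while comparing tools, a simple checklist works. The sketch below is my own illustration, not part of the white paper; the `AgentChecklist` name and its field names are hypothetical labels for the four questions above.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class AgentChecklist:
    """One yes/no answer per evaluation question (field names are hypothetical)."""
    maintains_context: bool
    breaks_down_goals: bool
    selects_tools: bool
    adapts_to_new_information: bool


def missing_capabilities(checklist: AgentChecklist) -> List[str]:
    """List the questions a tool answered 'no' to, in checklist order."""
    return [f.name for f in fields(checklist) if not getattr(checklist, f.name)]


# Example: a hypothetical chatbot that keeps context and uses tools,
# but neither plans multi-step work nor adapts to new information.
chatbot = AgentChecklist(
    maintains_context=True,
    breaks_down_goals=False,
    selects_tools=True,
    adapts_to_new_information=False,
)
gaps = missing_capabilities(chatbot)
```

An empty list would suggest the tool behaves like an agent in the sense described here; anything left in the list shows where it still falls short.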